If you are reading this, you are probably already past the hype.

You have seen the demos. You have clicked through a dozen landing pages. Every AI support tool promises the same thing: automation, instant answers, and fewer tickets. And yet, if you talk to founders and support leads who have tried these tools in production, the story is usually more complicated.

Based on my experience working with real support teams, most AI support tools do not fail because the AI is “bad”. They fail because they are bolted onto broken systems.

So the real buying decision is not “Which chatbot is smartest?”

It is “Will this actually resolve customer issues inside our existing support reality?”

In this article, I will walk you through how to think about that decision, and how to avoid buying a chatbot that sounds confident but leaves your team cleaning up the mess.

1. The real decision buyers are making (even if they do not realise it)

On the surface, it looks like you are buying a chatbot.

In reality, you are choosing whether to redesign your support system around AI, or just add another layer on top of it.

Most tools focus on conversation. But customers do not contact support because they want to chat. They want something fixed, explained, refunded, updated, or escalated.

That is the first mental shift:

Resolution, not conversation

Accuracy, not confidence

Systems, not bots

Once you adopt that lens, a lot of shiny demos start to look very thin.

2. Why most AI support tools disappoint after the demo

Demos are clean. Production is messy.

In demos:

Knowledge is perfectly curated

Questions are phrased clearly

Edge cases do not exist

Escalation is a single happy-path button

In real support:

Customers ask half-formed questions

Policies live in five different docs

Answers change weekly

Humans need context when they take over

Most AI support tools struggle because they assume:

Knowledge quality magically improves over time

Adding “actions” equals solving support

AI accuracy increases just by seeing more chats

None of these are true by default.

Without a shared source of truth and clear workflows, AI simply becomes a faster way to deliver inconsistent answers.

3. The three things that actually determine success

When AI support works, it is not because of a single feature. It is because three things are designed as one system.

1. Knowledge (the boring part everyone underestimates)

Your AI is only as good as the knowledge you give it and, more importantly, how that knowledge is maintained.

What matters is not:

How many documents you upload

Which model you use

What matters is:

Is there one source of truth?

Can teams update it without breaking consistency?

Does internal knowledge match what customers see?

If your help centre says one thing, internal Slack answers another, and your AI invents a third version, trust erodes fast.
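To make the "one source of truth" check tangible, here is a minimal sketch of how a team might flag topics where their sources disagree. Everything in it is an assumption for illustration: the function name `find_conflicts`, the `(topic, source, answer)` tuple shape, and the source labels are all hypothetical, and the exact-match comparison is deliberately crude. A real audit would need human review or a smarter comparison.

```python
from collections import defaultdict


def find_conflicts(answers: list[tuple[str, str, str]]) -> dict[str, dict[str, str]]:
    """Return topics whose answers differ across sources.

    Each entry in `answers` is a hypothetical (topic, source, answer) tuple.
    """
    by_topic: dict[str, dict[str, str]] = defaultdict(dict)
    for topic, source, answer in answers:
        # Normalise case and whitespace so trivial differences are ignored.
        by_topic[topic][source] = " ".join(answer.lower().split())
    # Keep only the topics where at least two sources give different answers.
    return {
        topic: per_source
        for topic, per_source in by_topic.items()
        if len(set(per_source.values())) > 1
    }


answers = [
    ("refund window", "help_centre", "30 days"),
    ("refund window", "internal_notes", "14 days"),
    ("invoice date", "help_centre", "1st of the month"),
    ("invoice date", "internal_notes", "1st of the  Month"),
]
conflicts = find_conflicts(answers)
```

In this made-up data, only "refund window" is flagged, because the two "invoice date" answers differ only in case and spacing. The point is not the code itself but the habit: conflicts should be found by the team before the AI finds them in front of a customer.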

2. Workflow and actions (but grounded in reality)

Yes, actions matter. Checking order status. Creating tickets. Booking calls.

But actions without workflow design create new problems:

When should the AI act vs ask?

What happens if data is missing?

How are failures surfaced?

Support is not just “do a thing”. It is a sequence of decisions. Tools that treat actions as magic buttons often collapse under edge cases.
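The three questions above can be sketched as a single decision function. This is an illustration under stated assumptions, not how any particular tool works: the names `Step`, `Decision`, and `decide_next_step`, and the limit of two failed attempts, are hypothetical choices made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Step(Enum):
    ACT = "act"
    ASK = "ask"
    ESCALATE = "escalate"


@dataclass
class Decision:
    step: Step
    reason: str
    missing: list[str] = field(default_factory=list)


def decide_next_step(
    required: list[str],
    known: dict[str, str],
    failed_attempts: int,
    max_attempts: int = 2,  # assumed limit, chosen only for illustration
) -> Decision:
    """Choose between acting, asking the customer, or escalating."""
    # Failures are surfaced explicitly instead of being retried forever.
    if failed_attempts >= max_attempts:
        return Decision(Step.ESCALATE, f"action failed {failed_attempts} times")
    # Missing or empty data means the AI should ask, not guess.
    missing = [name for name in required if not known.get(name)]
    if missing:
        return Decision(Step.ASK, "required data is missing", missing)
    return Decision(Step.ACT, "all required data is present")


decision = decide_next_step(
    required=["order_id", "email"],
    known={"email": "customer@example.com"},
    failed_attempts=0,
)
```

Here the function returns an ASK decision that names `order_id` as the missing piece. The design choice worth noticing is that every outcome carries a reason, so a failure is visible to the team rather than silently swallowed.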

3. Human hand-off (clean, contextual, visible)

This is where most tools quietly fail.

A good hand-off means:

The human sees what the AI saw

The customer does not repeat themselves

Ownership is clear

If escalation feels like falling off a cliff, your team pays the price in frustration and longer resolution times.
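One way to picture a clean hand-off is as a record that must be complete before the conversation changes hands. The sketch below is hypothetical: the `Handoff` fields and the `handoff_gaps` check are my own illustration of the three criteria above, not a description of any vendor's data model.

```python
from dataclasses import dataclass, field


@dataclass
class Handoff:
    """Hypothetical record passed from the AI to a human agent."""
    customer_messages: list[str]  # what the customer actually wrote
    ai_summary: str               # the AI's reading of the issue
    sources_used: list[str] = field(default_factory=list)       # articles or records consulted
    actions_attempted: list[str] = field(default_factory=list)  # what the AI already tried
    owner: str = ""               # named person or queue now responsible


def handoff_gaps(handoff: Handoff) -> list[str]:
    """List what is missing for a clean hand-off; an empty list means ready."""
    gaps = []
    if not handoff.customer_messages:
        gaps.append("no customer messages: the customer will have to repeat themselves")
    if not handoff.ai_summary.strip():
        gaps.append("no summary: the human cannot see what the AI saw")
    if not handoff.owner.strip():
        gaps.append("no owner: nobody is clearly responsible")
    return gaps


ready = Handoff(
    customer_messages=["I was charged twice last month."],
    ai_summary="Possible duplicate charge; invoice and app totals differ.",
    owner="billing-queue",
)
bare = Handoff(customer_messages=[], ai_summary="")
```

With these example values, `handoff_gaps(ready)` returns an empty list, while `handoff_gaps(bare)` reports all three gaps. When you evaluate a tool, asking to see its equivalent of this record is a quick way to find out whether escalation was designed or just bolted on.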

4. A simple before/after support scenario

Let us make this concrete.

Customer question:

“Hey, I was charged twice last month and my invoice doesn’t match what I see in the app.”

Typical AI chat tool

Confidently explains billing policy

Links to a generic article

Cannot see account-specific data

Escalates with no context

Result: The customer is annoyed, and the human agent starts from scratch.

System-based AI support

Understands billing and product context

Checks account data or asks for what is missing

Explains why the discrepancy exists

Escalates with full context if needed

Result: Either resolved instantly, or escalated cleanly with trust intact.

The difference is not “smarter AI”. It is shared knowledge, clear workflow, and thoughtful escalation.

5. How to evaluate AI support tools using this lens

When you are evaluating tools, stop asking:

“What actions does it support?”

“How fast can I launch?”

Start asking:

Where does knowledge live, and who owns it?

How, concretely, does accuracy improve?

What does a failed answer look like?

How does my team work with this daily?

Ask vendors to show:

A messy, real question

A failed attempt and recovery

A human taking over mid-flow

If they cannot show that, the demo is doing too much work.

6. Where platforms like Mando AI fit (as an example)

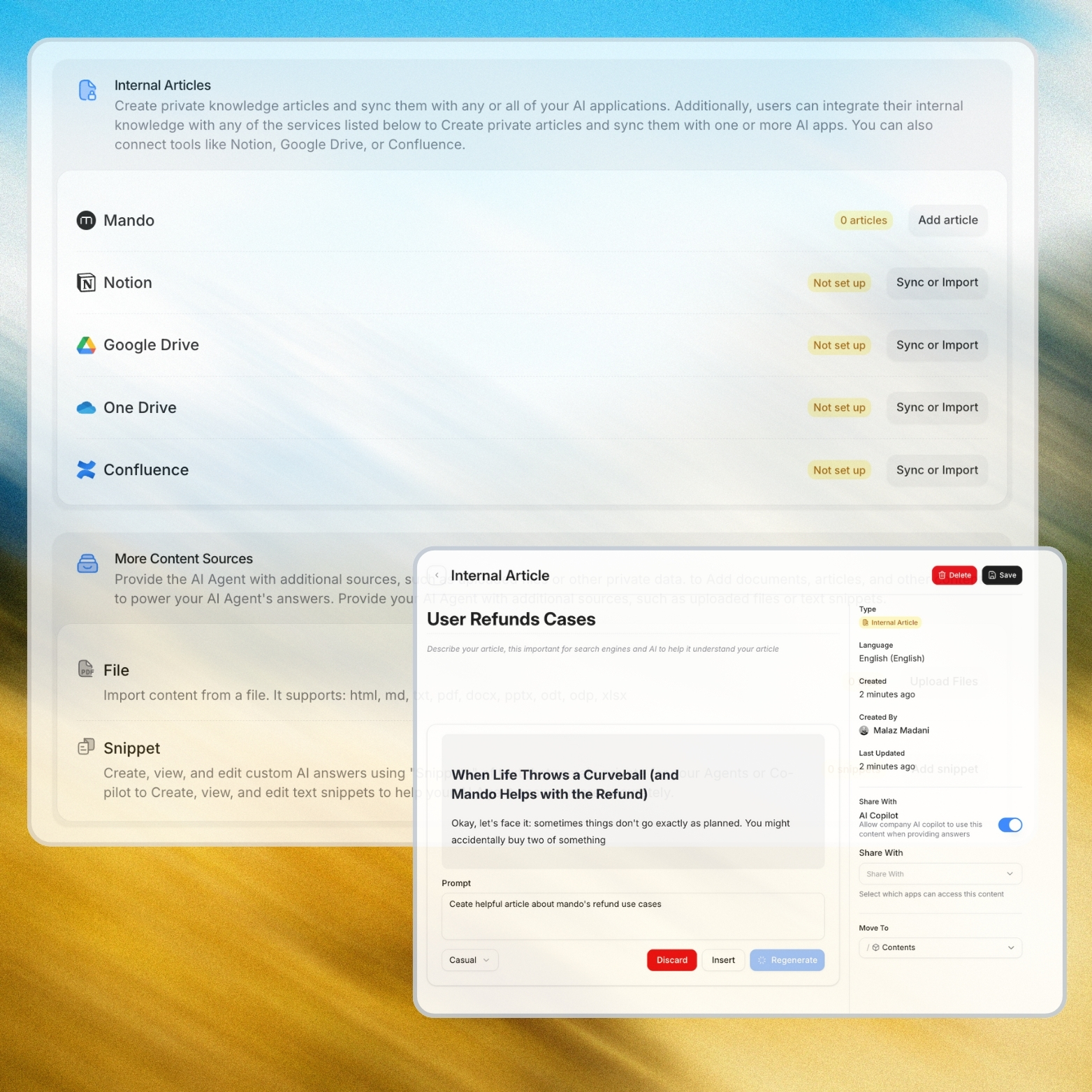

This is where platforms like Mando AI tend to show their strengths.

Not because they promise magic automation, but because they are built as support systems, not chat widgets.

The emphasis is on:

One shared source of truth for customers and teams

Consistent answers across external and internal use

Clear escalation paths instead of dead ends

That matters if your problem is not starting AI support, but making it work without creating chaos.

It is a practical reference point for teams who care more about long-term outcomes than quick wins.

7. How to know if you are ready to buy, or should wait

You are likely ready for AI support if:

You see the same questions every week

Your knowledge is mostly written down

Your team agrees on “correct” answers

You can commit to ongoing ownership

You should probably wait if:

Your support process is still undefined

Knowledge lives only in people’s heads

You expect set-and-forget automation

You are buying purely off demos

AI support rewards clarity. It punishes chaos.

Final thoughts

Most AI support tools fail quietly. Not in week one, but in month three, when edge cases pile up and trust erodes.

The real buying decision is not about chatbots. It is about whether you are ready to treat support as a system where knowledge, actions, and humans work together.

If you evaluate tools with that lens, you will immediately see which ones are built to talk, and which ones are built to resolve.

And that, in my experience, is the difference that actually matters.